If Data Accuracy Is the New Water, Here’s How to Tap That Well

Unlocking Value from Your Organization’s Abundant Resource: Prioritizing Data Accuracy

If data is the new water, why are so many organizations drowning?

In analyst conversations and media coverage alike, data consistently emerges as a pressing problem that needs a swift resolution. There’s intense focus on the 5 Vs of big data: exponential growth in volume, velocity, and variety makes the other two Vs, veracity (accuracy) and value, hard to determine. The common argument is that harnessing data accurately can boost workforce productivity, streamline operations, and reduce risks and costs.

While undeniably valid, these outcomes represent the ‘how’ rather than the ‘why’ of prioritizing and ensuring data accuracy.

The #1 Reason to Get Control Over Your Data

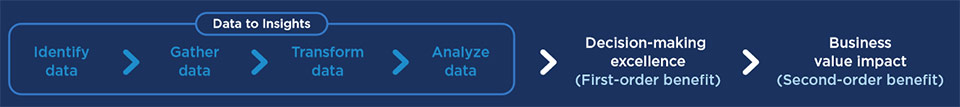

The best reason to improve data accuracy, access, and searchability is to drive better decision making. Despite myriad technology innovations – from SaaS business applications, APIs, and data lakes to cloud-based storage and ultra-fast backups – data is still mired in applications, databases, and devices across your organization. Thus, instead of going to a central well to draw forth data, your business teams and users must use “pipes,” or various connections linking systems.

They also sometimes bootstrap data gaps with manual processes, such as when your teams retype data in Excel spreadsheets. As a result, the “data water” that your teams hope is clean and usable is often dark and dirty, impeding their ability to use it for decision making.

Caption: Qlik/IDC Report.

The Race to Develop Analytics Is On

Fortunately, your C-suite is likely well aware of this problem: 87% of CXOs surveyed by IDC say that developing a more intelligent enterprise is their #1 priority for the next five years. According to IDC, CXOs want to improve data-driven decision making by:

- Spending 80% of their time on analytics and just 20% on data preparation. (Today, the reverse is true for most organizations.)

- Evaluating data needs, including the data’s extent, condition, and reliability, during the problem-definition phase of initiatives.

- Practicing data-driven decision making and eliminating human bias. That’s especially important as organizations increase their use of artificial intelligence (AI) and machine learning (ML).

- Ensuring that teams and businesses make data-driven decisions rather than defaulting to experience or instinct.

Mastering Data Has Its Rewards

Organizations that achieve data-driven decision making:

- Drive twice the business value of their peers with the weakest data capabilities.

- Improve revenue, operational efficiency, and profitability by an average of 17%, according to three in four respondents.

Source: Qlik/IDC.

Why It’s So Hard to Manage and Control Data

So far, so good. With such a payoff, it may seem surprising that more organizations haven’t achieved data mastery. But there is a good reason: data is difficult to conquer. The CXOs IDC surveyed say they are struggling with:

- New data types (45%)

- New external data (40%)

- New internal data (45%)

- Major architectural changes (30%)

- New KPIs (38%)

- New analytics (47%)

Up to 55% of all data is “dark data”: organizations collect it but never use it, forfeiting a golden opportunity to learn more about their customers, businesses, and operations.

How Many Data Types Are There?

It’s impossible to know just how many data types there are, as new content is being created all the time. It’s more helpful to understand data categories. According to data scientist Michael Gramlich:

- Structured data is data that is well-organized. Surprisingly, it’s not always easy for machines to read and interpret. Examples include Excel and Google spreadsheets, CSV files, and relational database tables – all content created by humans.

- Semi-structured data is data with some degree of organization. Examples include TXT files, HTML files, and JavaScript files, so this group includes organized emails, web pages, social media, chat and voice transcripts, and more. This data is typically captured in organizations’ content management systems (CMS), which may offer only weak search capabilities.

- Finally, unstructured data has no predefined organizational form or specific format. Examples include IoT analog signals, texts, pictures, video, human speech, and sound files. This data is often difficult to interpret, which is why AI and ML are so important for search and analytics applications.
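To make the three categories more concrete, here is a minimal Python sketch using only the standard library. The sample inputs and function names are hypothetical illustrations, not part of any product: a CSV string stands in for structured data, a small HTML snippet for semi-structured data, and a free-text note for unstructured data. The point is how much each category gives a program for free.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical sample inputs, one per data category.
STRUCTURED = "order_id,customer,amount\n1001,Acme,250.00\n1002,Globex,99.50\n"
SEMI_STRUCTURED = "<html><body><h1>Q3 update</h1><p>Shipments rose.</p></body></html>"
UNSTRUCTURED = "Customer called to say the last shipment arrived late and damaged."


def read_structured(text):
    """Structured: every row shares the same named columns, so fields are read directly."""
    return list(csv.DictReader(io.StringIO(text)))


class TagCollector(HTMLParser):
    """Semi-structured: tags give partial organization, so text can be grouped by tag."""

    def __init__(self):
        super().__init__()
        self.current_tag = None
        self.by_tag = {}

    def handle_starttag(self, tag, attrs):
        self.current_tag = tag

    def handle_endtag(self, tag):
        self.current_tag = None

    def handle_data(self, data):
        # Record non-empty text under the tag that currently encloses it.
        if self.current_tag and data.strip():
            self.by_tag.setdefault(self.current_tag, []).append(data.strip())


def read_semi_structured(text):
    parser = TagCollector()
    parser.feed(text)
    parser.close()  # flush any buffered text
    return parser.by_tag


def read_unstructured(text):
    """Unstructured: no schema, so without NLP all we get is a list of words."""
    return text.lower().replace(".", "").split()


if __name__ == "__main__":
    rows = read_structured(STRUCTURED)
    print(sum(float(r["amount"]) for r in rows))  # 349.5
    print(read_semi_structured(SEMI_STRUCTURED))  # {'h1': ['Q3 update'], 'p': ['Shipments rose.']}
    print(read_unstructured(UNSTRUCTURED)[:3])  # ['customer', 'called', 'to']
```

The structured input can be totaled in one line, and the semi-structured input can at least be split into a heading and body. The unstructured note yields only a bag of words: nothing in it tells a program that a shipment was late or damaged, which is the gap that NLP, AI, and ML are meant to close.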

Where Your Organization’s Data Is Stored

Just where is your organization’s data stored? As the graphic below demonstrates, it’s likely stored in a wide array of places: across data centers, in the cloud, at the edge, and in other locations. That presents a challenge for data science and analytics teams that want to surface data and make it more usable.

As IoT applications grow in popularity, even more of this data will push toward the edge. Organizations that want to operationalize it will have to become expert at choosing, from vast torrents of inputs, the right data to capture and analyze.

Source: Seagate/IDC.

What Are the Risks of Using Bad Data?

So, what happens if your organization can’t solve its data water challenges and get good, clean data to interpret? Your leaders and teams run the risk of making bad decisions.

- In manufacturing, bad data can contribute to stalled product innovation, poor inventory management, supply chain delays, product quality and machine issues, unplanned downtime, and regulatory risks, among other problems.

- In financial services, bad data can lead to excessive credit, financial, operational, compliance, and legal risks, among others.

- In healthcare, poor data can lead to an inability to spot new trends, inaccurate population planning, misaligned services and drug interventions, and more.

It’s easy to see that poor data causes significant business harm across industries. It mires your organization in present-day challenges, while keeping you from identifying and pursuing profitable new opportunities.

How You Can Extract Insights from Vast Data Volumes

Fortunately, poor data, challenging collection and management practices, and difficulties creating analytics don’t need to be your destiny.

When you deploy an AI-powered search engine from Sinequa, you can finally aggregate and analyze all your data. Sinequa’s intelligent search platform offers 200 connectors and supports 350 different document formats, and these numbers are growing all the time. Business applications? Employee emails? Social chatter? M2M connections?

Whatever the format, Sinequa can harness it for search. Our business search engine extracts meaning from your content using natural language processing (NLP), AI, and ML. Users find the content they want in an easy-to-use interface that also ranks results by relevance.

Imagine accessing data when and where you need it, enriching it for use in analytics, and providing it to business teams for decision making. Imagine being certain that the data you see is comprehensive, up to date, and easy for your teams to access, view, and analyze.

If you’re experiencing data “leaks,” or the loss of valuable data you need for decision making; collecting “dark data” that’s never used; or spending your data science team’s time and talent on data cleaning and interpretation rather than analysis, you need a cognitive search platform.

Sinequa can help you get started and reach results faster than you think. Make this the year you finally master your data challenges and exploit the full value of the content that you, your business, your competitors, and your customers produce.