Will ChatGPT Make Search Obsolete?

There has been a lot of buzz around ChatGPT, the conversational AI bot based on the latest large language model (LLM) from OpenAI. The internet has exploded, marveling at its impressive technology. Many are even describing ChatGPT as one of those “aha” moments when you can feel that the world’s future has changed with a whole new idea of what’s possible. And I’d say they’re right; this is an extraordinary example of advanced AI! But an important question you may be asking is how ChatGPT can be used in business, and what it means for search.

ChatGPT offers some never-before-seen AI capabilities. It can:

- Generate language that is more humanlike than ever before

- Speak intelligently on a broad domain of subjects

- Engage in conversation while remembering prior exchanges

Can I use ChatGPT for my business?

While ChatGPT has many implications for what AI will do for business in the future, it won’t have much impact in the short term.

ChatGPT is free and available today as an interactive chatbot and as an API, but this is only temporary. OpenAI wants to learn from its use and improve the technology. Of course, running it costs money, so free access will surely disappear and will likely be replaced by a more powerful paid model.

Can I train my own version of ChatGPT?

Not yet. You can count on one hand (maybe two) the companies that have the resources and the skills to do what OpenAI has done. OpenAI isn’t saying much about the model ChatGPT was based on (GPT-3.5), but we know that GPT-3 was trained on about 300 billion tokens (that’s on the order of a billion pages of text!) and has 175 billion parameters. It requires a massive investment to train an LLM of this size.
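For a feel of where a page count like that comes from, here is a rough back-of-the-envelope sketch. The 250 words per page and the 0.75 words per token are assumptions (common rules of thumb, not figures from OpenAI), and `pages_of_text` is a made-up helper name.

```python
# Back-of-the-envelope estimate: how many pages of text is a training corpus?
WORDS_PER_PAGE = 250  # assumption: a typical printed page


def pages_of_text(units: float, words_per_unit: float = 1.0,
                  words_per_page: int = WORDS_PER_PAGE) -> float:
    """Convert a count of words (or tokens) into an approximate page count."""
    return units * words_per_unit / words_per_page


corpus_size = 300e9  # the 300 billion figure quoted for GPT-3

# Reading the figure as words:
print(f"{pages_of_text(corpus_size):,.0f} pages")
# Reading it as tokens, assuming roughly 0.75 words per token:
print(f"{pages_of_text(corpus_size, words_per_unit=0.75):,.0f} pages")
```

Either way, the answer lands around a billion pages, which is the point: nobody is assembling a corpus like that on a side-project budget.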

Why is ChatGPT getting so much attention?

So, why all the hype? On one hand, ChatGPT is an amazing technology. On the other hand, it’s easy to get excited about anything new and anticipate what’s next to come. Computers and GPUs are faster, algorithms are stronger, and models are more sophisticated. The technology horizon moves quickly, and right now, nothing is moving faster than the advancement of LLMs (AI applied to natural language processing, sometimes called foundation models).

Amid ChatGPT’s impressive results and the media hype, it’s easy to overlook its weaknesses. We are often so enamored with what a new technology can do that we fail to think critically about what it can’t handle.

Generative Models

ChatGPT’s strength is in the G, which stands for Generative. ChatGPT doesn’t point you to something that already exists. Rather, it generates – creates something brand new – every time. That makes it excellent at doing some things humans do, like telling a story, writing an essay, or even generating a poem. And for these things, especially when they are fictional, ChatGPT is nothing short of astounding – it’s a talk-with-me-like-I’m-human AI, and it talks back like a human.

But this strength in creation becomes a weakness when “new” or “unique” isn’t the goal. ChatGPT doesn’t actually understand anything. It’s only capable of recognizing patterns and writing text based on those patterns. Since it relies only on patterns, you can’t be sure that it’s going to have its facts straight. For instance, Ben Evans (a VC investor with a strong internet presence) asked ChatGPT to write his bio – and what it created sounded very convincing. But many of the facts in the bio were wrong (he hasn’t written any books, for example). ChatGPT did what it was trained to do – it used patterns to create a bio that was representative of people like Ben Evans. But it was wholly incapable of writing a factual bio of a particular person.

Using Generative Models for Search

ChatGPT can also answer questions, and it often does a remarkable job of producing informative and insightful answers. As a result, some have said that ChatGPT can replace search and that Google is in trouble. If an answer to any question – not a list of results, but an explanation in natural language – can be generated on the fly…why do we need Google? Why would we need search at all?

It’s a fair question. But before that future can become a reality, ChatGPT has to overcome three shortcomings:

- Cost

- Accuracy

- Nuance

Cost

Low cost will come in time, but right now, ChatGPT costs a lot to run and is prohibitively expensive to train. In fact, it doesn’t know anything that happened after Q3 2021 because it took so much time and money to train. OpenAI spent more than a year getting it ready! That’s fine for learning about the Renaissance, but would you ask your R&D team to ignore anything done in the last 12 months?

Accuracy

Accuracy is ChatGPT’s Achilles’ heel. As a generative model, it is a probabilistic “black box.” That means you don’t know the source of its information, why it picked that material, or the context that was used in the generation process. There is nothing to tell you if it’s right or wrong, and ChatGPT doesn’t know either. It will answer your question with complete confidence. It’s kind of like punching numbers into a calculator: the calculator always appears correct. If adding up your monthly grocery bill comes to $4,286.37, the calculator thinks it’s fine; it gives you no hint that there’s been a mistake. But unlike a calculator, ChatGPT can make errors in the “calculation,” too.

Nuance

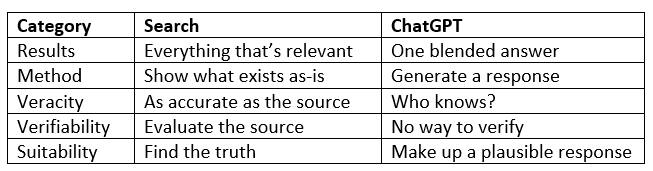

The final shortcoming is nuance. A generative approach produces one result. Giving one answer is fine for simple factual queries (“who is the president of the United States?”), but very little of the world is so black and white. Many topics are nuanced, and a proper understanding requires reviewing several perspectives from multiple sources and choosing the most appropriate content based on context. This is what search engines do – they provide a list of relevant results. In the search world, this is called high recall – all of the relevant information is available. But ChatGPT is utterly incapable of broad recall. It might integrate information from more than one source, but it ultimately gives only one blended result.
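To make recall a little more concrete, here is a minimal sketch of how it is usually computed: the fraction of all relevant documents that actually show up in what the user sees. The document IDs and relevance judgments below are made up for illustration, and treating a generated answer as if it surfaced a single source is a simplifying assumption.

```python
def recall(retrieved, relevant):
    """Fraction of the relevant documents that appear in the retrieved set."""
    relevant = set(relevant)
    if not relevant:
        return 0.0
    return len(relevant & set(retrieved)) / len(relevant)


# Hypothetical relevance judgments for one nuanced query.
relevant_docs = {"doc1", "doc2", "doc3", "doc4"}

# A search engine returns a list of results; here it includes one irrelevant hit.
search_results = ["doc1", "doc2", "doc3", "doc7", "doc4"]

# Simplification: a single blended answer modeled as surfacing one source.
single_answer = ["doc2"]

print(recall(search_results, relevant_docs))  # 1.0
print(recall(single_answer, relevant_docs))   # 0.25
```

The list of results contains every relevant perspective, so the reader can weigh them; the single answer, however well written, exposes only a quarter of what is out there in this toy setup.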

Pick the Right Tool for the Job

When accuracy and/or nuance are important, a generative approach is the wrong tool for the job. To know what’s right and to see all the information, you need to see everything as it already exists – that’s exactly what information retrieval (also known as search) does. In other words, “show me all the information there is, don’t fabricate a summary based on patterns.”

Summary: ChatGPT vs. Search

ChatGPT and other LLMs (or foundation models) like it are amazing at generating text (particularly fiction) and imitating styles. Their seeming mastery of language and their unwavering confidence make them seem like excellent question-answering tools. But they are designed to address a different problem than search engines: they generate new text from patterns, while search retrieves information as it already exists.

Fun with ChatGPT

I’ll close with an example of ChatGPT answering a very difficult question. I was trying to think of something easy for a human but hard for an AI to answer because it probably hadn’t seen it before. So I asked:

How can I use a soccer ball and a brick like a golf ball and tee?

It responded:

To use a soccer ball and a brick like a golf ball and tee, you can place the brick on the ground and rest the soccer ball on top of it.

That is absolutely amazing! It kept going…

This will mimic the setup of a golf ball and tee, allowing you to practice your golf swing with the soccer ball. Keep in mind that the soccer ball will not behave exactly like a golf ball, so you may need to adjust your swing accordingly.

OK, I’m not sure I can “adjust” my swing enough to make this useful, but hey, it’s an AI. However, ChatGPT wasn’t finished:

Additionally, make sure to choose a sturdy brick that will not break or crumble under the weight of the soccer ball.

Oh, ChatGPT! You were soooo close!

The Future is Bright

So, while ChatGPT is an impressive milestone and a window into the future of AI, it’s not a replacement for search. But it’s also just one approach to using LLMs, and there are many more; for instance, Sinequa already uses LLMs as a foundation for its Neural Search technology. As LLMs continue to advance and costs continue to fall, one thing is certain: this is just the beginning!